Multilayer artificial neural networks (ANN)

ReLU : rectified linear unit
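
A minimal sketch of ReLU as an activation function, assuming the standard definition max(0, x). The names `relu`, `values` and `activated` are only for illustration.

```python
def relu(x: float) -> float:
    """Rectified linear unit: keeps positive inputs, maps everything else to 0."""
    return max(0.0, x)


# Negative inputs are clipped to 0; positive inputs pass through unchanged.
values = [-2.0, -0.5, 0.0, 1.5]
activated = [relu(v) for v in values]
print(activated)  # [0.0, 0.0, 0.0, 1.5]
```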

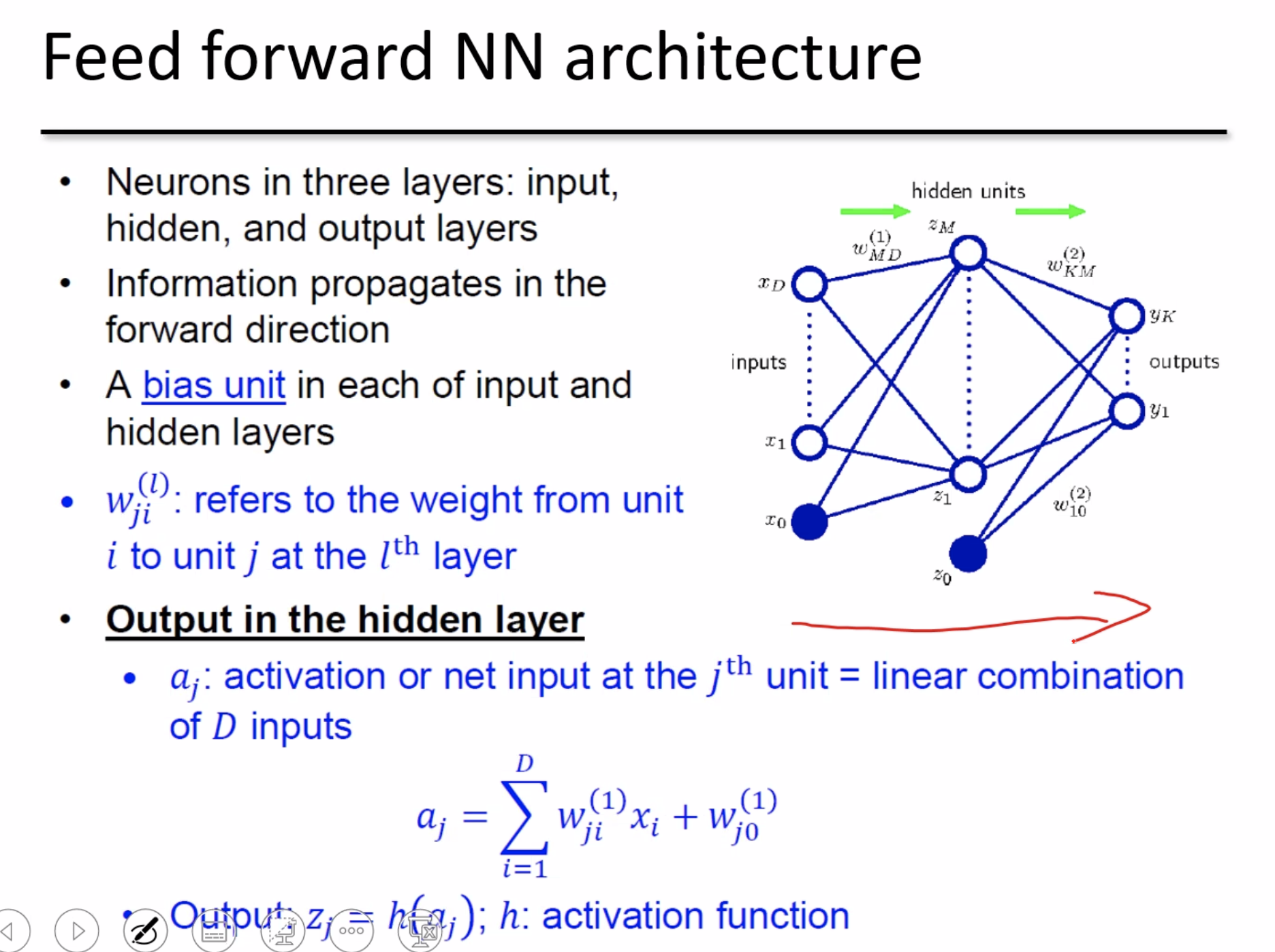

Output of a hidden layer: it is passed on as the input to the next layer. (This process repeats across many layers.)

With two output classes, the output is a probability between 0 and 1.
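
A rough sketch of how an input could flow through one hidden layer to a single output between 0 and 1. It assumes ReLU in the hidden layer and a sigmoid on the output node, which is a common choice but not necessarily the one used in the lecture. All weights and the helper names (`dense`, `forward`) are made up for illustration.

```python
import math


def relu(x):
    return max(0.0, x)


def sigmoid(z):
    # Squashes any real number into the open interval (0, 1).
    return 1.0 / (1.0 + math.exp(-z))


def dense(inputs, weights, biases):
    """One fully connected layer: each row of `weights` feeds one node."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def forward(x, hidden_w, hidden_b, out_w, out_b):
    # The hidden layer output becomes the input of the output layer.
    hidden = [relu(z) for z in dense(x, hidden_w, hidden_b)]
    z_out = dense(hidden, [out_w], [out_b])[0]
    return sigmoid(z_out)


# Hypothetical weights for 2 inputs, 2 hidden nodes, 1 output node.
hidden_w = [[0.5, -0.3], [0.8, 0.2]]
hidden_b = [0.1, -0.1]
out_w = [1.0, -1.5]
out_b = 0.05

p = forward([1.0, 2.0], hidden_w, hidden_b, out_w, out_b)
print(p)  # a probability for the positive class
```

With more hidden layers, the same `dense` plus activation step would simply be applied once per layer.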

Training means optimizing the weights.
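
To make "optimizing the weights" concrete, here is a simplified sketch: plain gradient descent on the log loss of a single sigmoid node, not a full multilayer network with backpropagation. The toy data (logical OR), the learning rate and the function names are assumptions chosen for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, x):
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def log_loss(weights, bias, data):
    """Average cross-entropy between predictions and 0/1 labels."""
    total = 0.0
    for x, y in data:
        p = predict(weights, bias, x)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(data)


def train(data, learning_rate=0.5, epochs=200):
    weights = [0.0] * len(data[0][0])
    bias = 0.0
    n = len(data)
    for _ in range(epochs):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for x, y in data:
            error = predict(weights, bias, x) - y  # d(loss)/d(pre-activation)
            for i, xi in enumerate(x):
                grad_w[i] += error * xi
            grad_b += error
        # Move each weight a small step against its average gradient.
        weights = [w - learning_rate * g / n for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / n
    return weights, bias


# Toy data set: logical OR of two inputs.
data = [([0.0, 0.0], 0), ([0.0, 1.0], 1), ([1.0, 0.0], 1), ([1.0, 1.0], 1)]

loss_before = log_loss([0.0, 0.0], 0.0, data)
weights, bias = train(data)
loss_after = log_loss(weights, bias, data)
print(loss_before, loss_after)  # the loss should go down after training
```

In a multilayer network the idea is the same, but the gradients for the hidden-layer weights are obtained by propagating the error backwards through the layers.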

M is the number of nodes; hidden layer: M + 1 (presumably the M nodes plus one bias term).

Hidden layer:

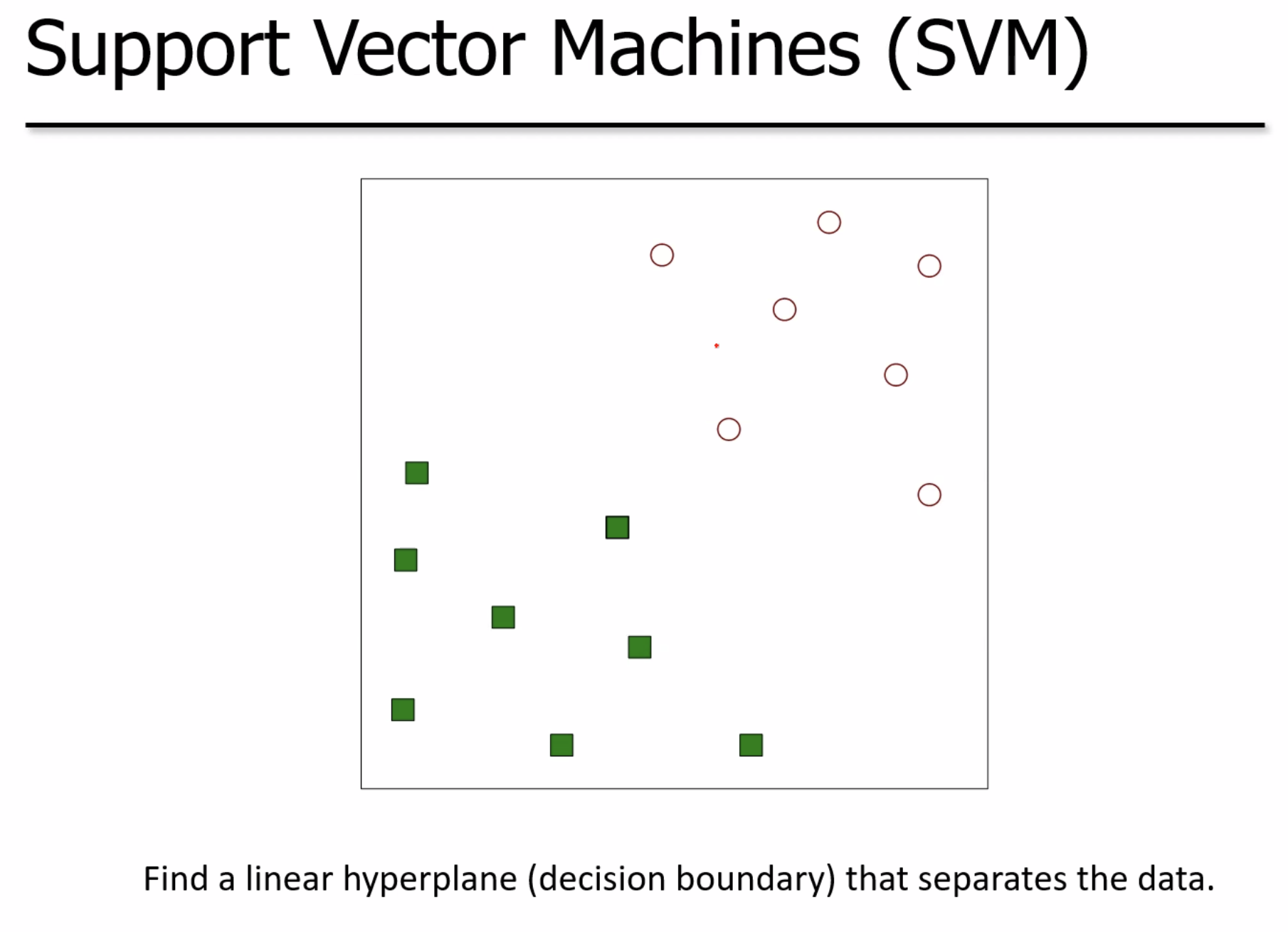

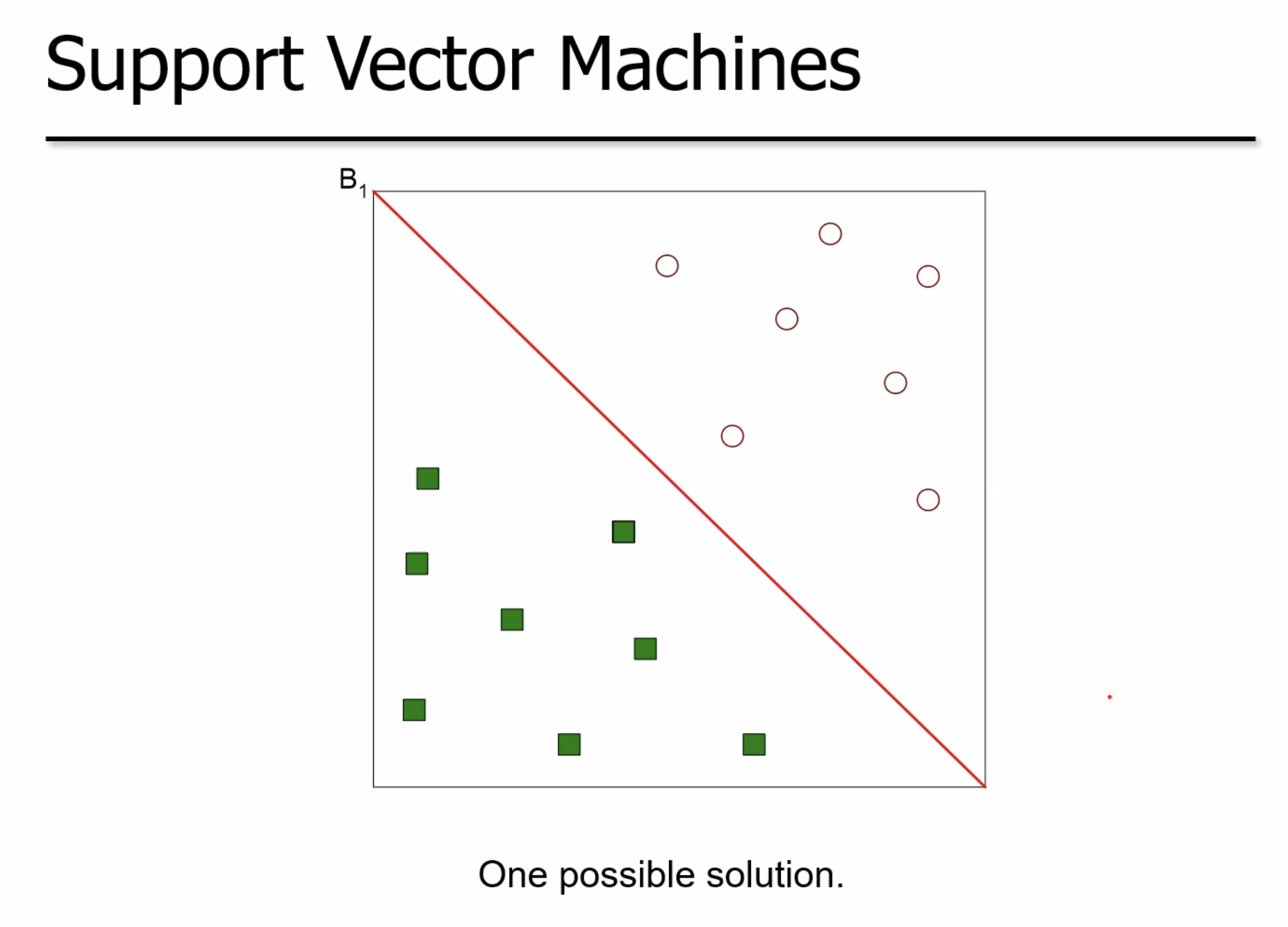

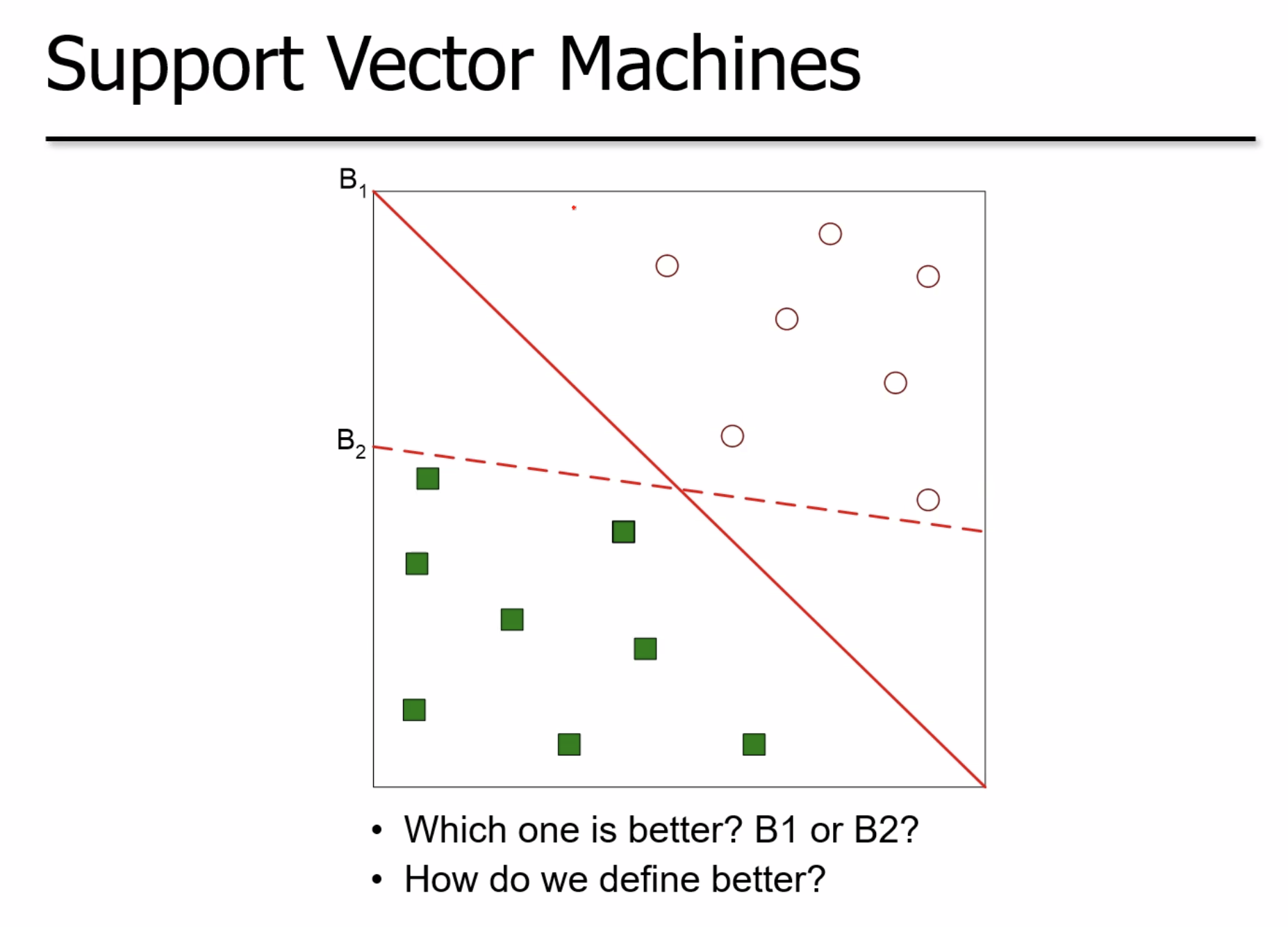

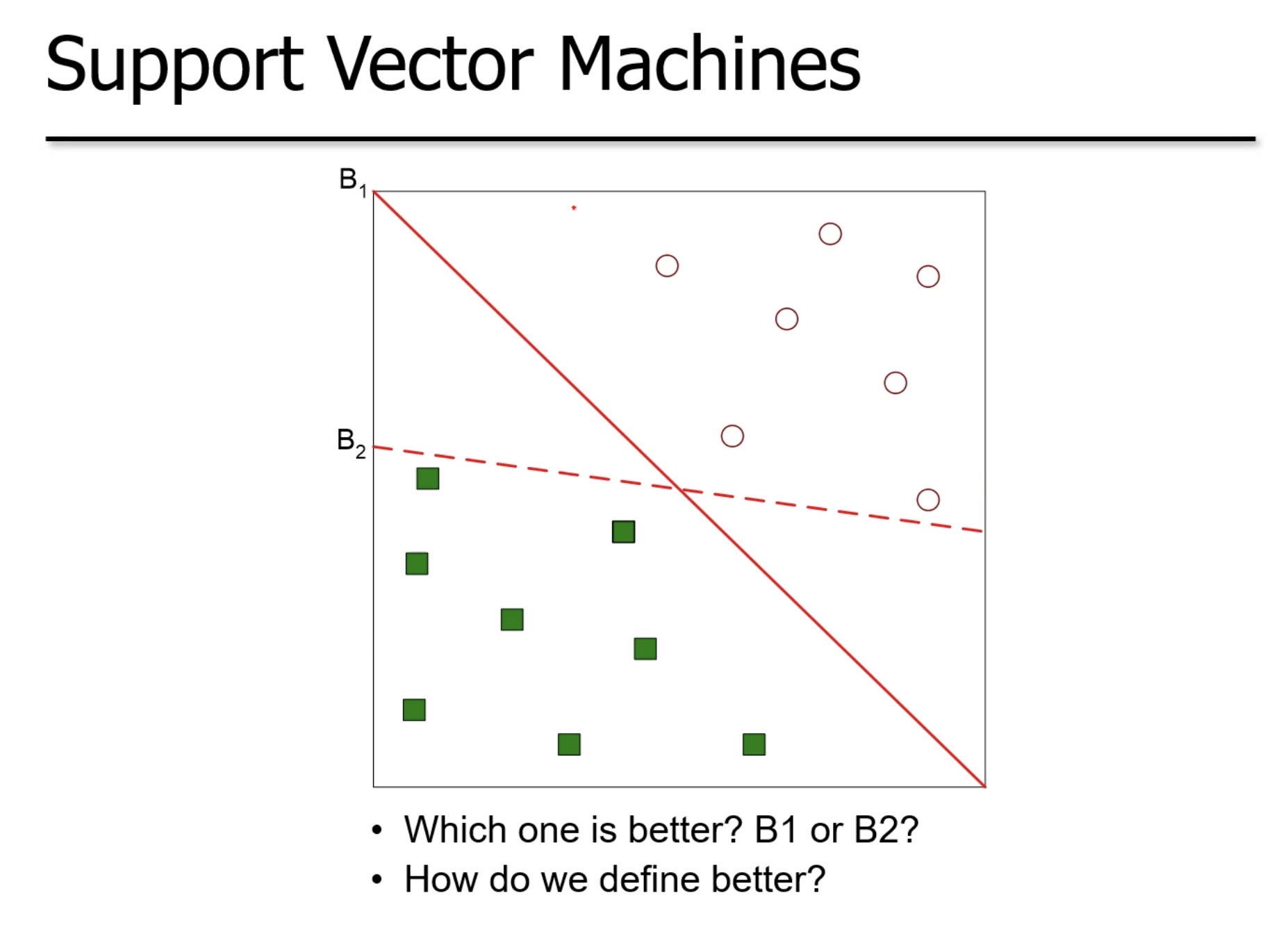

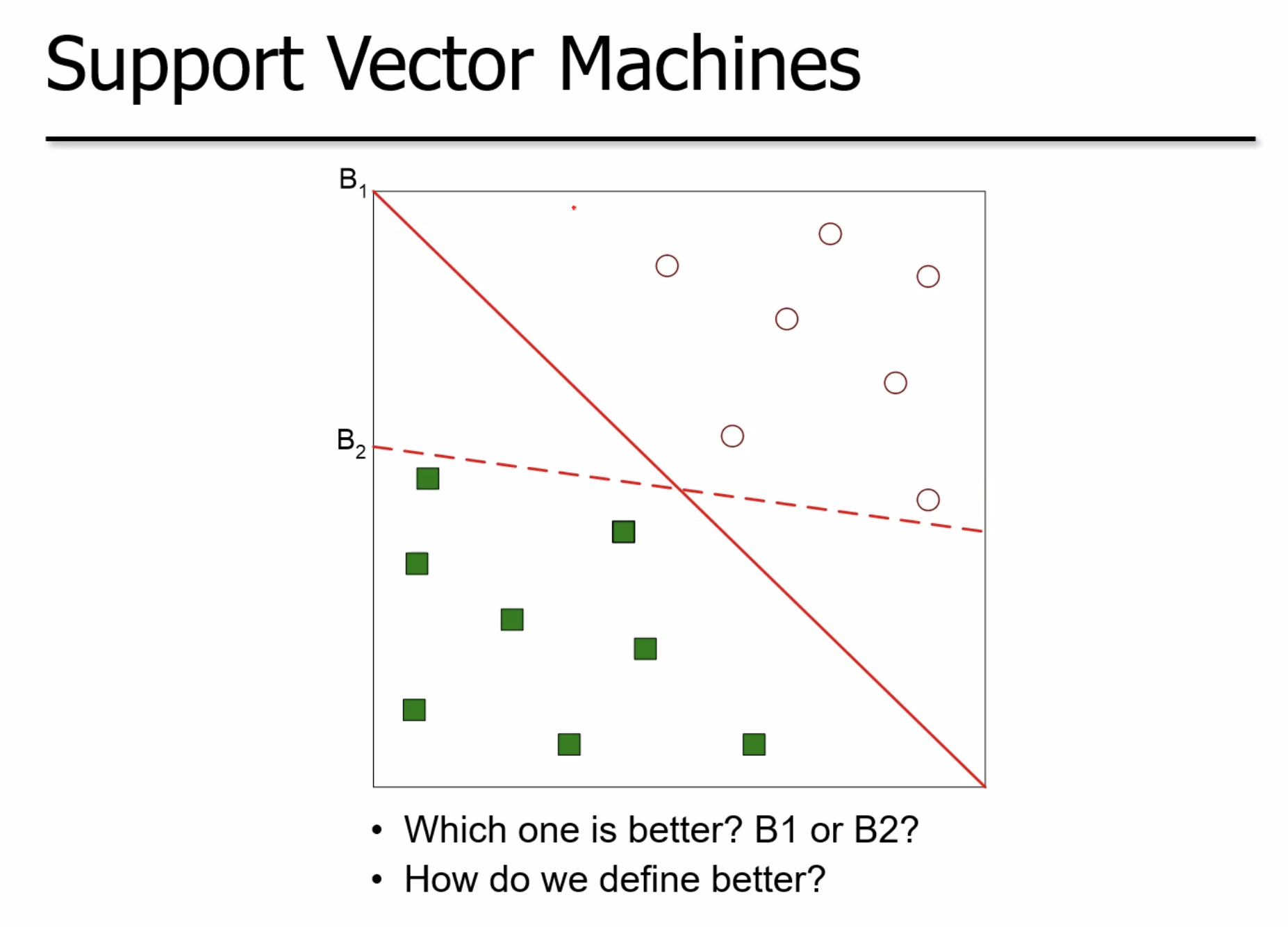

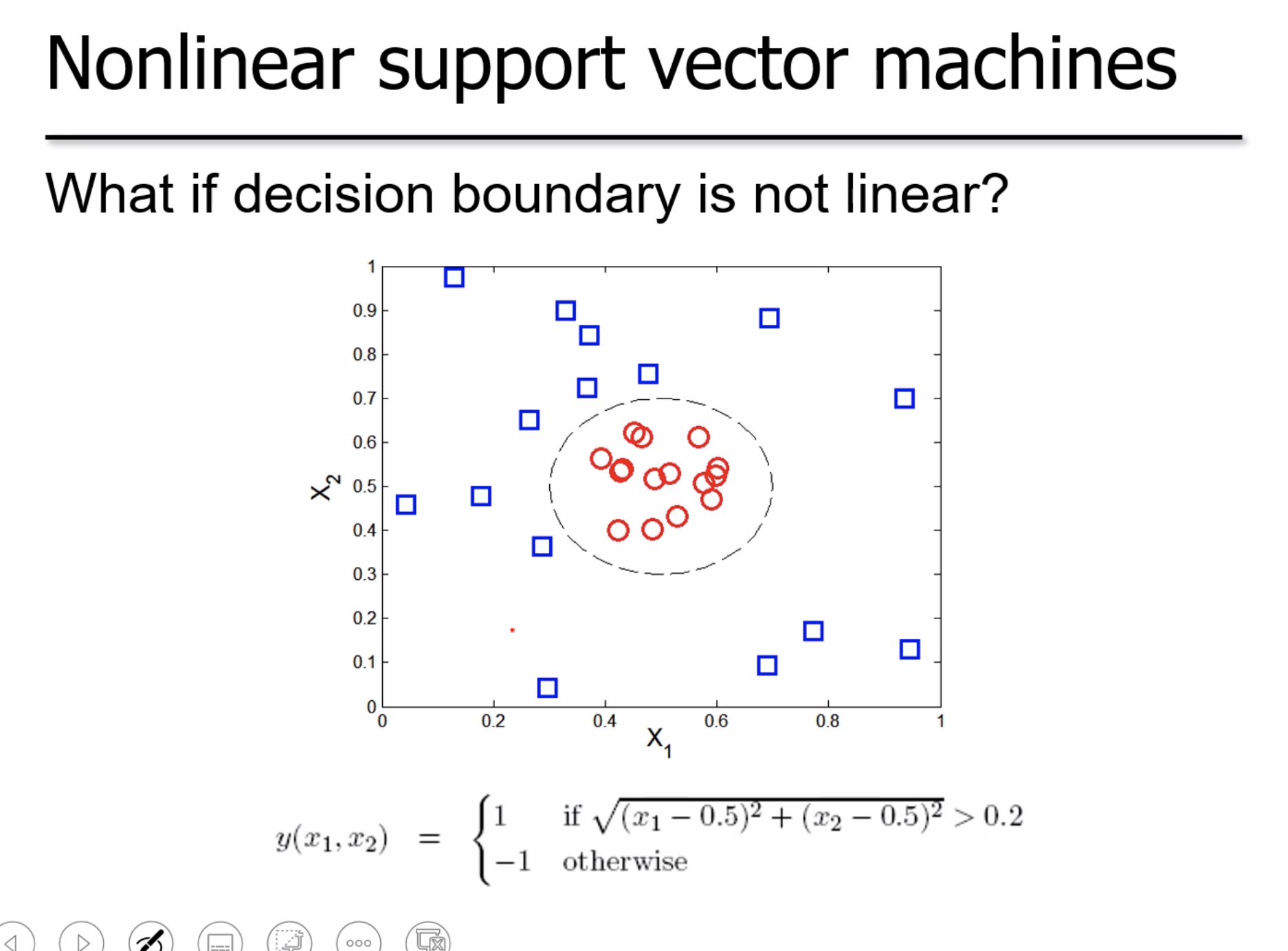

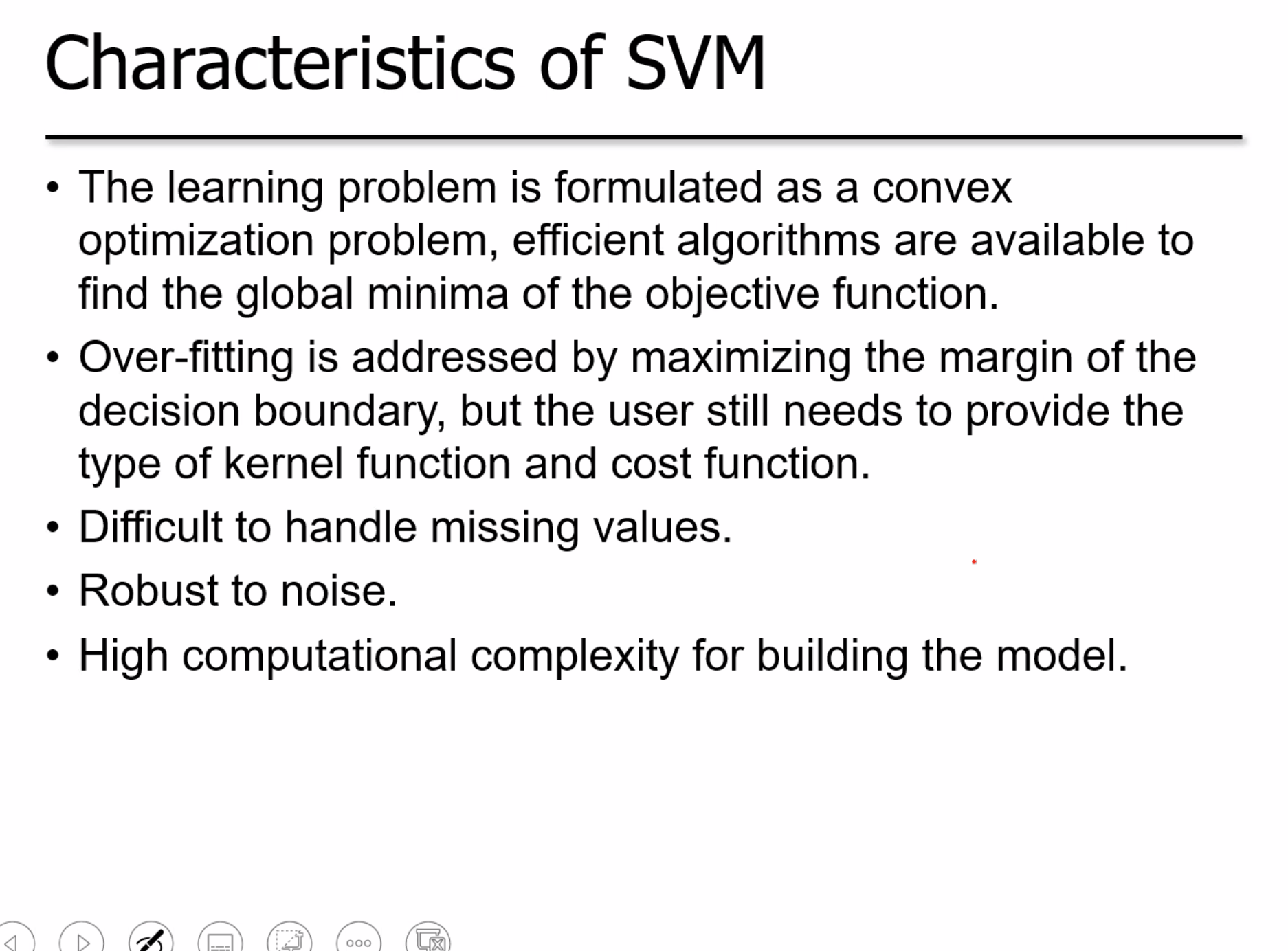

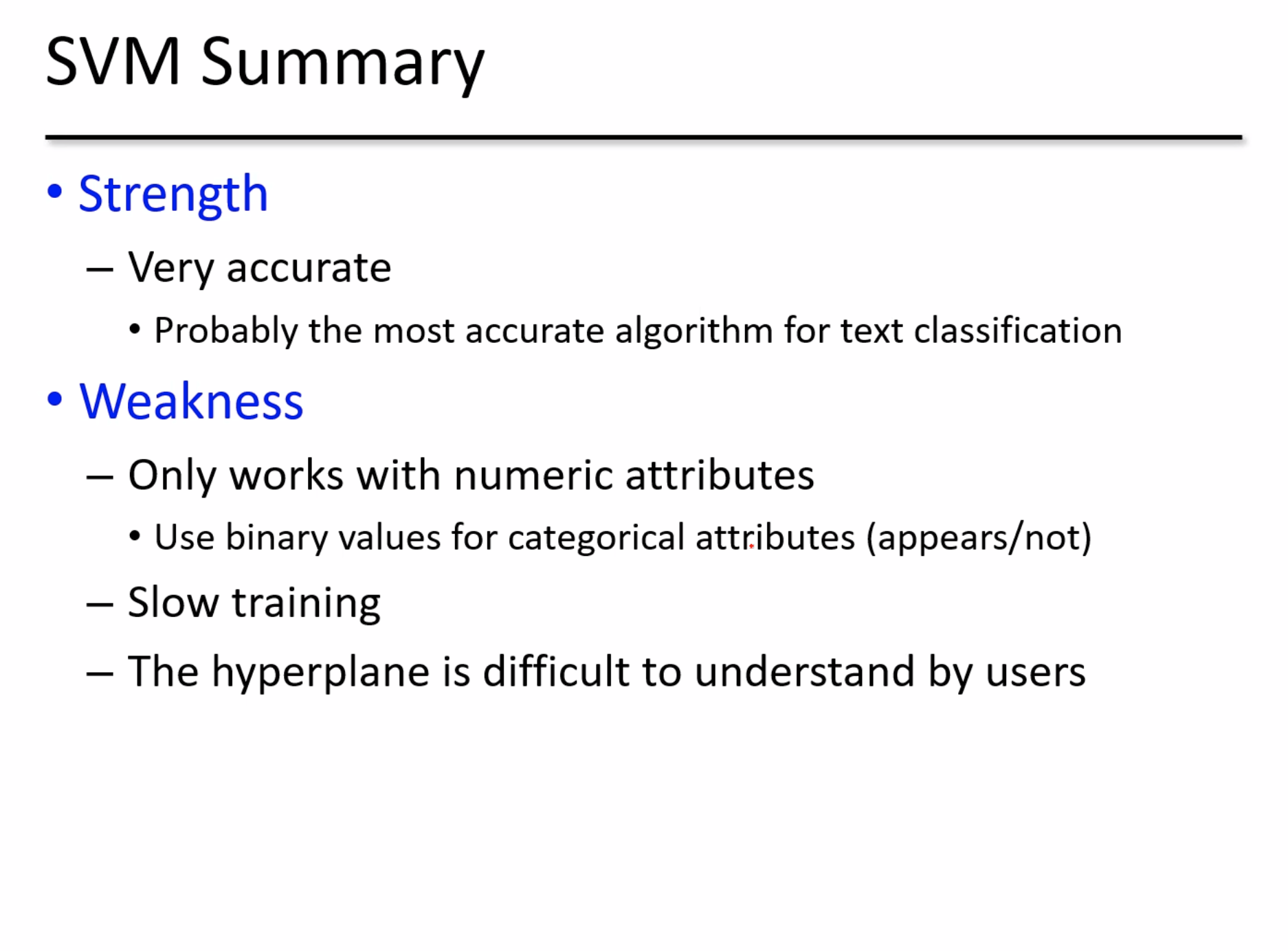

Support vector machines
